The very first electronic computers were idiosyncratic, one-off research installations.[1] But as they entered the marketplace, organizations very quickly assimilated them into a pre-existing culture of data-processing – one in which all data and processes were represented as stacks of punched cards.

Herman Hollerith developed the first tabulator capable of reading and counting data based on holes in paper cards, for the United States census in the late nineteenth century. By the middle of the following century, a whole menagerie of descendants of that machine had proliferated across large businesses and government offices worldwide. Their common language was a card consisting of a series of columns, with each column (typically) representing a digit that could be punched in one of ten places to represent 0 through 9.[2]

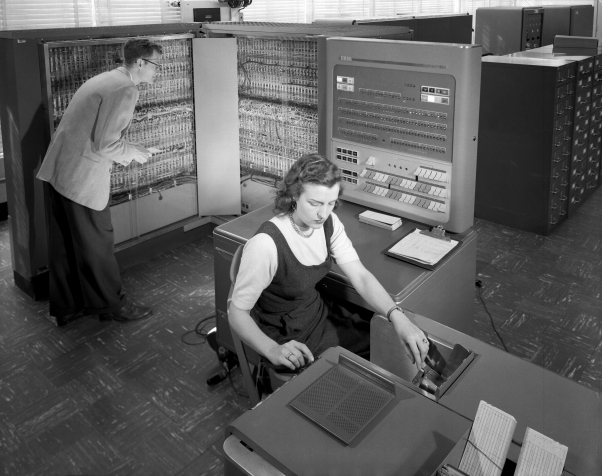
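As a rough illustration of the card format described above, here is a minimal Python sketch. It assumes only the simplified layout the text describes: one decimal digit per column, with ten punch positions for 0 through 9. The names `punch`, `render`, and `read_back` are hypothetical, and real cards varied by manufacturer and era.

```python
# Hypothetical sketch of the simplified card layout described in the text:
# one column per digit, ten punch positions (rows 0 through 9) per column.
ROWS = 10


def punch(number_text: str) -> list[int]:
    """Return, for each column, the row index where the hole is punched."""
    if not number_text or any(ch not in "0123456789" for ch in number_text):
        raise ValueError("this sketch can only punch the digits 0-9")
    return [int(ch) for ch in number_text]


def render(columns: list[int]) -> str:
    """Draw the card as text: one line per row, 'O' for a hole, '.' otherwise."""
    lines = []
    for row in range(ROWS):
        cells = "".join("O" if col == row else "." for col in columns)
        lines.append(f"{row} {cells}")
    return "\n".join(lines)


def read_back(columns: list[int]) -> str:
    """Recover the digits by noting which row is punched in each column."""
    return "".join(str(row) for row in columns)


if __name__ == "__main__":
    card = punch("1949")
    print(render(card))
    print(read_back(card))
```

Each column carries exactly one hole, so a machine reading the card only needs to detect which of the ten positions is open in each column.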

The punching of input data into cards required no expensive machinery, and could be distributed among the various offices across the organization which generated that data. When that data needed processing – for example, to compute the revenue numbers for the sales department’s quarterly report – the relevant cards could then be brought to a data center and queued to run through the necessary machines to produce a set of output data on cards or printed paper. Around the central processing machines – tabulators and calculators – lay a whole array of peripheral devices for gang punching, duplicating, sorting, and interpreting cards.[3]

By the latter half of the 1950s, virtually all computers were installed into this kind of “batch-processing” system. From the point of view of the typical end user in that sales department, very little had changed. You brought in a stack of cards to be processed, and received a printout or another stack of cards as the result of your job. In between, the cards were transformed from holes in paper into electronic signals and then back again, but that made little difference to you. IBM had been the dominant player in punched-card machinery, and remained the dominant player in electronic computers, in large part due to its existing sales relationships and wide range of peripheral equipment. It simply replaced its customers’ mechanical tabulators and calculators with a faster, more flexible data-processing engine.

This system of punched-card data processing had functioned smoothly for decades and showed no sign of decline – quite to the contrary. Yet in the late 1950s, a fringe subculture of computer researchers began to argue that this whole way of working should be overturned – the best way to use a computer, so they claimed, was to do so interactively. Rather than leaving a job and coming back later to pick up the results, the user should commune directly with the machine, and summon its powers as needed. In Capital, Marx described how industrial machines – merely set in motion by men – supplanted tools directly controlled by a human hand.[4] Computers, however, began life as machines. Only later did some of their users re-imagine them as tools.

This re-imagining did not originate in the data processing centers at the likes of the U.S. Census Bureau, Metropolitan Life, or U.S. Steel.[5] For an organization trying to get this week’s payroll processed as efficiently and reliably as possible, it is hardly desirable to have someone disrupt that processing by futzing around on the computer. The value of being able to sit down at a console and just try things out was, however, more obvious to academic scientists and engineers, who wanted to explore a problem, to attack it from a variety of angles until a weak point was discovered, to alternate quickly between thought and action.

So, the ideas came from academic researchers. But the money to pay for using a computer in such a profligate fashion did not come from their department heads. The new subculture (one might even say, cult) of interactive computing grew out of a productive partnership between the American military and the elite among American universities. This mutually beneficial relationship began in World War II. Atomic weaponry, radar, and other wonder weapons had taught the military leadership that seemingly arcane academic preoccupations could turn out to have tremendous military significance. The coziness persisted for roughly a generation before disintegrating in the politics of another war, in Vietnam. In the interim, American academics had access to vast sums of money, with few questions asked, for almost anything that could be vaguely justified in relation to national defense.

For interactive computers, the justification started with a bomb.

Whirlwind and SAGE

On August 29, 1949, a Soviet research team successfully detonated their first nuclear weapon at a test site in Kazakhstan. Five days later a U.S. Air Force reconnaissance flight over the North Pacific picked up traces of radioactive material from that test in the atmosphere. The Soviets had the bomb, and their American rivals now knew it. Tensions between the two had already been running high for over a year, since the Soviets had cut off the land routes into the Western-controlled sectors of Berlin, in response to plans by the Western powers to rebuild Germany as a strong economic power.

The blockade ended in the spring of 1949, stymied by a massive operation by the Western Allies to supply the city by air. Tensions between the U.S. and the U.S.S.R. eased slightly. Nonetheless, those in charge of America’s national defense could not ignore the existence of a potentially hostile power with access to nuclear weapons, especially given the ever-increasing size and range of strategic bombers. The U.S. had a chain of radar stations for detecting incoming aircraft, constructed on the Atlantic and Pacific coasts during World War II. But these posts used outdated technology, did not cover the northern approaches over Canada, and were not supported by any central system to coordinate air defense.

To devise a remedy for this situation, the Air Force (an independent branch of the U.S. military since 1947) convened an Air Defense Systems Engineering Committee (ADSEC). The group is better known to history as the Valley Committee, after its chair, George Valley. He was an MIT physicist, and a veteran of the wartime radar research group known as the Rad Lab, transformed after the war into the Research Laboratory of Electronics (RLE). After studying the problem with his committee for about a year, Valley issued his final report in October 1950.

One might guess that such a report would be a stodgy mess of bureaucratese, culminating in a carefully hedged and largely conservative proposal. Instead one finds a bracing piece of creative argumentation, and a radical and risky recommended course of action. It clearly owes a debt to another MIT professor, Norbert Wiener, who argued that the study of living creatures and machines could be unified under the single discipline of cybernetics. For Valley and his co-authors started from the premise that an air defense system is an organism – not metaphorically, but in actual fact. Radar stations serve as its sensory organs, interceptors and missiles as the effectors with which it can act in the world. Both operate under the control of a director, which uses sensory inputs to decide what actions to take.[^cyber] They further argued that a director composed purely of human elements would be incapable of stopping hundreds of incoming aircraft across millions of square miles in a matter of minutes, and that therefore as many as possible of the directive functions should be automated.

Most radically, they concluded that the best means to automate the director would be via digital electronic computers, which could substitute for several areas of human judgment: analyzing incoming threats, directing weapons against those threats (calculating intercept courses and transmitting them to fighters in the air), and perhaps even strategizing about optimal response patterns. That computers would be suited to such a task was by no means obvious. At the time there existed exactly three functioning electronic computers in the entire United States, none of which came close to meeting the reliability requirements of a military system upon which millions of lives might hinge. Nor did any have the capability to respond to incoming data in real time. They were simply very fast, programmable number-crunchers.

Nonetheless, Valley had reason to believe that a real-time digital computer was possible, because he knew about Project Whirlwind. It had begun during the war at MIT’s Servomechanisms Laboratory, under a young graduate student named Jay Forrester. Its original goal was to build a general-purpose flight simulator, one that could be reconfigured to support new models of aircraft without being rebuilt from scratch each time. A colleague convinced Forrester that his simulator should use digital electronics to process inputs from the pilot and generate new output states for the instruments. Gradually the effort to build this high-speed digital computer outgrew and overshadowed the original objective. With the flight simulator forgotten and the war that had occasioned it long since over, Whirlwind’s overseers at the Office of Naval Research (ONR) were becoming disenchanted with its ever-growing budget and ever-receding completion date. In 1950, the ONR drastically slashed Forrester’s budget for the coming year, with the intention of cutting off the project completely after that.

But for George Valley, Whirlwind was a revelation. The actual Whirlwind computer had yet to become fully functional. But once it was… here was a computer that was not all disembodied mind. A computer with senses and effectors. An organism. Forrester had already mooted plans for expanding Whirlwind to become the centerpiece of a national military command-and-control system. To the computer experts on the ONR’s board, who saw computers as suited only to mathematical applications, this appeared grandiose and absurd. But it was just the kind of vision Valley was looking for, and he arrived just in time to save Whirlwind from oblivion.

Despite (or perhaps because of) its bold ambition, the Valley report convinced the Air Force leadership, and they kicked off a massive new research and development program to first figure out how to build an air defense system centered on digital computers, and then build one. The Air Force partnered with MIT to carry out the core research effort, a natural choice given the presence of Whirlwind and the RLE, and a history of successful air-defense collaboration going back to the Rad Lab during World War II. They dubbed the new effort Project Lincoln, and constructed a new research facility, Lincoln Laboratory, to house it at Hanscom Field, about fifteen miles northwest of Cambridge.

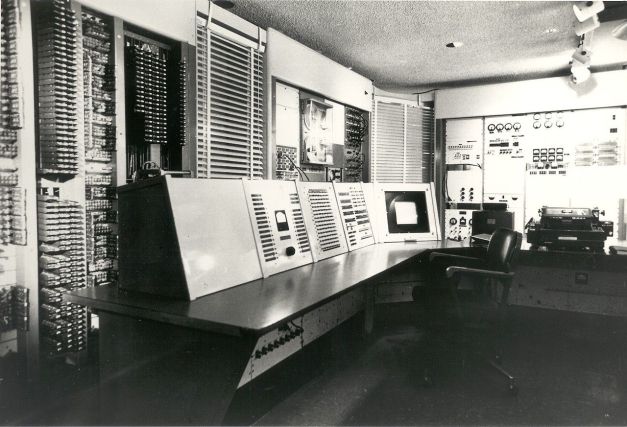

The Air Force called the overall computerized air defense project SAGE, a typically awkward military acronym for Semi-Automatic Ground Environment. Whirlwind would serve as the test-bed computer for proving out the concept before going to full-scale hardware production and deployment – a responsibility that would fall to IBM. The production version of Whirlwind to be built by IBM received the rather less evocative name of AN/FSQ-7.[6]

By the time the Air Force drew up its plans for the full SAGE system in 1954, it consisted of a variety of radar installations, air bases, and anti-aircraft weapons, all controlled from twenty-three direction centers, massive blockhouses designed to survive an airstrike. To fill these centers, IBM would need to provide forty-six computers, not twenty-three, at a cost to the Air Force of many billions of dollars. That was because they still relied on vacuum tubes for their logic circuits, and vacuum tubes burnt out like light bulbs. Any of the tens of thousands of tubes in the active computer could fail at any moment. It would obviously be unacceptable to leave an entire sector of the nation’s air space undefended while technicians conducted their repairs, so a redundant machine was always kept at the ready.

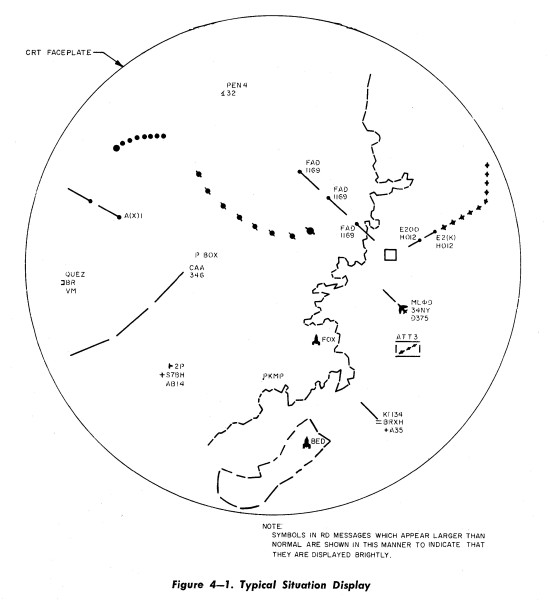

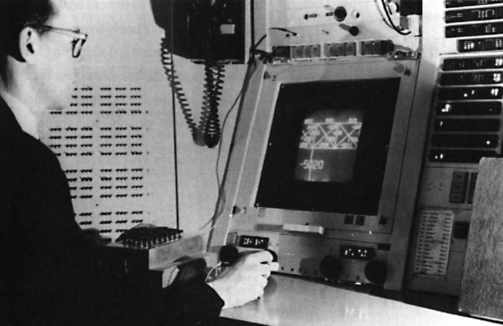

Each direction center contained dozens of operators in front of cathode-ray tube (CRT) screens, each monitoring a sub-sector of the surrounding airspace.

The computer tracked any potential airborne threats and rendered them as tracks onto the screens. An operator could use a light gun to pull up additional details on a track and issue commands for a defense response, which the computer transformed into a printed message to an available missile battery or air force base.

The Virus of Interactivity

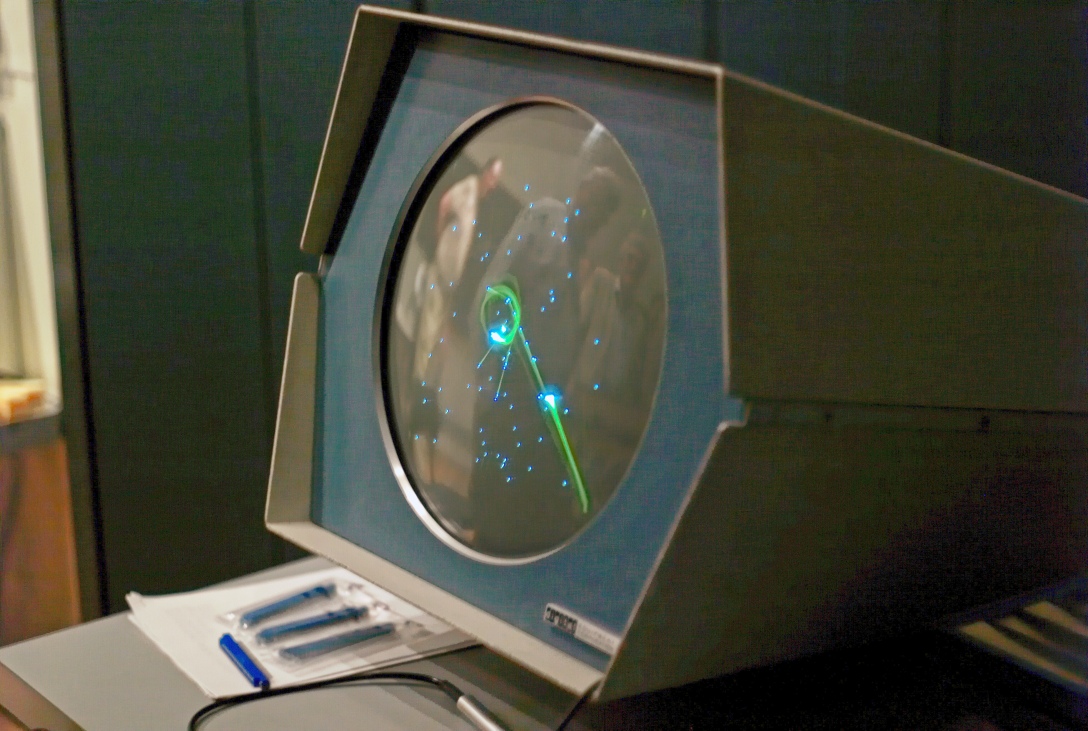

Given the nature of the SAGE system – a direct, real-time interaction between human operators and a digital computer via CRT, light gun, and console – it is not surprising that Lincoln Laboratory incubated the first cohort of interactive computing devotees. In fact, the entire computing culture at Lincoln Lab was an insular bubble, cut off from the batch-processing norm that was developing in the commercial world. Researchers used Whirlwind and its descendants by reserving a block of time when they would have exclusive access to the computer. They were accustomed to using their hands, eyes and ears to interact directly with it via switches, keyboards, brightly-lit screens, and even a loudspeaker, with no paper intermediary.

This odd little sub-culture spread into the wider world beyond Lincoln Lab like a virus, by direct physical contact. And if it was a virus, the closest thing to a patient zero was a young man named Wesley Clark. Clark dropped out of a graduate program in physics at Berkeley in 1949, to become a technician at a nuclear weapons plant. But he disliked the work. After reading several magazine articles about computers, he started looking for a way to get into what seemed an exciting new field, full of potential. He learned about the need for computer expertise at Lincoln from an advertisement, and in 1951 he moved East to take a position under Forrester, now head of Lincoln’s Digital Computer Laboratory.

Clark joined the Advanced Development Group, a subsection of the lab that epitomized the kind of laxity that prevailed in the military-university relationship in this period. Though technically a part of the Lincoln universe, the Advanced Development team existed in a bubble within a bubble, isolated from the immediate needs of the SAGE project and free to pursue any computing work that could be loosely tied to Lincoln’s air-defense mission. Their main task in the early 1950s was to bring up the Memory Test Computer (MTC), designed to prove out a new, highly efficient and reliable form of digital storage known as core memory, which would replace the finicky CRT-based storage that Whirlwind used.

Since the MTC had no users other than the engineers bringing it to life, Clark had total control of the computer for hours at a time. Clark had become interested in the trendy cybernetic admixture of physics, physiology, and information theory through an older colleague, Belmont Farley, who had contacts with a biophysics group in the RLE, down in Cambridge. Clark and Farley spent many of their long sessions with the MTC building neural network models in software, to investigate the properties of self-organizing systems. Out of these experiences with Farley and the MTC, Clark began to extract certain axiomatic principles of computer engineering, from which he never strayed. In particular, he came to believe that “convenience [for the user] is the most important design factor.”[7]

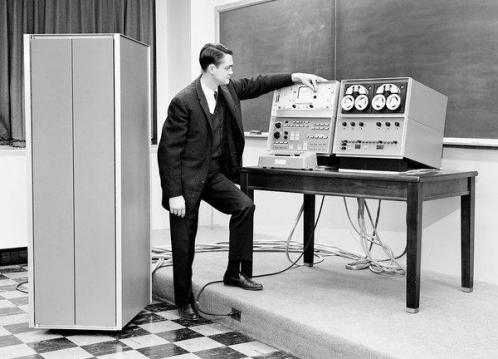

In 1955 Clark teamed up with Ken Olsen, one of the designers of the MTC, to propose a plan to build a new computer that would point the way to the next-generation military command-and-control systems. By using a very large core memory for storage and transistors for its logic, it would be much more compact, reliable, and powerful than Whirlwind. They initially put forward a design they called TX-1,[8] but the heads of Lincoln Lab rejected it as too costly and risky. Transistors had only entered the market a few years earlier, and only a handful of experimental computers had been built using transistor logic. So Clark and Olsen came back with a scaled-down proof-of-concept machine called TX-0, which was approved.

For Clark the ostensible command-and-control rationale for TX-0, though obviously necessary to sell it to Lincoln’s sponsors, held far less interest than its ability to further his ideas about computer design. For him interactive computing had ceased to be a mere fact of life at Lincoln and had become a norm – the correct way to build and use computers, especially for scientific work. He opened the TX-0 up for use by the MIT biophysicists, though their work had no connection with Lincoln’s air defense mission, allowing them to use the machine’s visual display to analyze EEG data from sleep studies. No one seemed to mind.

TX-0 was successful enough that in 1956 Lincoln approved a full-scale transistorized computer, TX-2, with a massive two-million-bit memory. It would take about two years to complete this new project. After that, the virus began to escape the lab. With the TX-2 completed, Lincoln had no need for the earlier proof-of-concept machine, and so agreed to ship the TX-0 down to Cambridge, on loan to the RLE. It was installed on the second floor, above the batch-processing Computation Center. And it immediately infected students and professors across the MIT campus, who clamored for time slots which would give them complete control of a computer.

It had already become clear that it was effectively impossible to write a computer program correctly on the first try. Moreover, for researchers exploring a new problem, it often wasn’t even clear at the outset what the correct behavior should be. But to get results from the Computation Center, one typically had to wait hours, or even overnight. To the dozens of nascent programmers on campus it was a revelation to go upstairs and be able to discover an error and instantly correct it, to try a new approach and instantly see improved results. Some used their time on TX-0 to complete serious science and engineering projects, but the joy of interactivity also called forth a more playful spirit. One student built a text editing program that he called Expensive Typewriter. Another student followed suit with Expensive Desk Calculator, which he used to do his numerical analysis homework.

Meanwhile Ken Olsen and another TX-0 engineer, Harlan Anderson, impatient with the slow progress on TX-2, decided to bring small-scale, interactive computing for scientists and engineers to the market. They left Lincoln to found Digital Equipment Corporation, setting up shop in a former textile mill on the Assabet River, about ten miles west of Lincoln. Their first computer, the PDP-1 (released in 1961), was effectively a TX-0 clone.

The TX-0 and Digital had begun to spread excitement about a new way of using computers beyond the confines of Lincoln Lab. Still, so far the interactive computing virus was geographically localized, contained to eastern Massachusetts. But that was about to change.

Further Reading

Lars Heide, Punched-Card Systems and the Early Information Explosion, 1880-1945 (2009)

Joseph November, Biomedical Computing (2012)

Kent C. Redmond and Thomas M. Smith, From Whirlwind to MITRE (2000)

M. Mitchell Waldrop, The Dream Machine (2001)

1. Editorial Note: “The Unraveling, Part 2” was due to be the next segment in this series. However, after repeated bouts of writer’s block, I’ve chosen to skip over it. Instead we will now move on to discuss how computers became enmeshed with telecommunications. I hope to be able to return to the story of AT&T at a later time.

2. A common language, that is, within a given manufacturer and era of machinery.

3. W.J. Eckert, Punched Card Methods in Scientific Computation (1980 [1940]), 4-24.

4. Karl Marx, Capital, vol. 1, Chapter 15.

5. All early purchasers of a UNIVAC, one of the first commercially available computers.

6. For Army-Navy / Fixed Special eQuipment, an acronym that makes Semi-Automatic Ground Environment look quite precise by comparison.

7. Joseph November, Biomedical Computing, 132.

8. For Transistorized and eXperimental computer. Certainly beats AN/FSQ-7 for clarity.
